Partial network partitions are a peculiar type of network fault that disrupts communication between some, but not all, nodes in a computer cluster. Although they have recently been found to be a surprisingly catastrophic source of computer system failures, partial network partitions have not been studied comprehensively by computer scientists or network administrators.

The good news is that a team of researchers at the Cheriton School of Computer Science has not only examined the problem in detail across many diverse computer systems but has also proposed a “Nifty” solution to fix it.

To understand the scope and impact of this systems problem, recent master’s graduate Mohammed Alfatafta, along with current master’s student Basil Alkhatib, PhD student Ahmed Alquraan, and their supervisor Professor Samer Al-Kiswany, comprehensively examined 51 partial network partitioning failures across 12 popular cloud-based computer systems. Their data sources ranged from publicly accessible issue-tracking systems to blog posts and discussions on developer forums.

A cluster is a set of connected computers that work together in a way that makes them appear to a user as a single system.

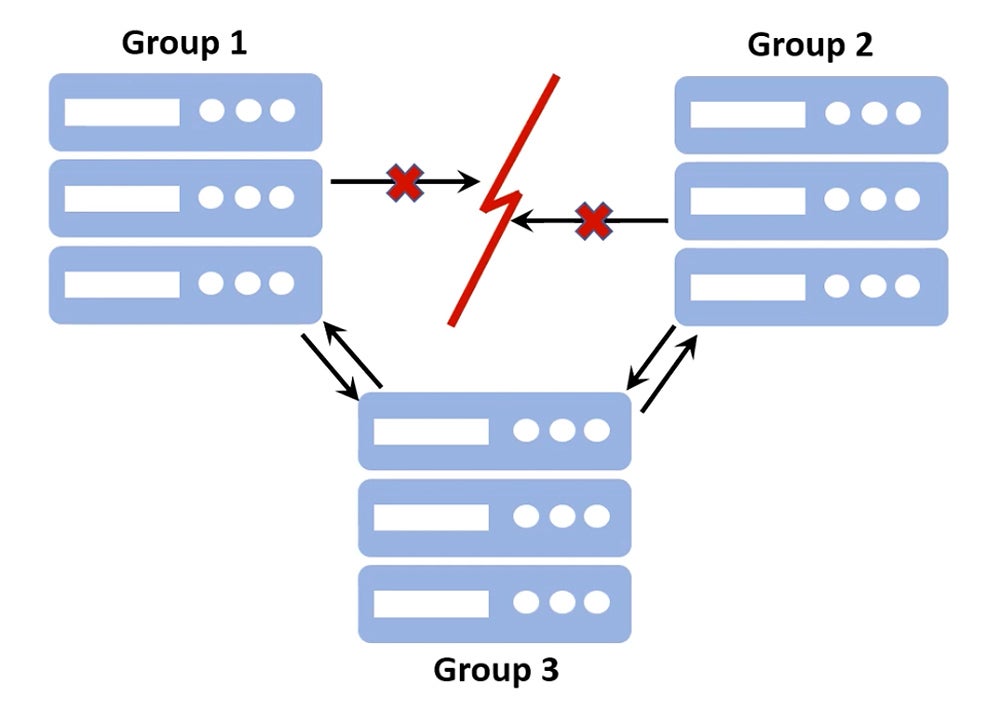

If a partial network partition occurs, some groups of servers within a cluster are disconnected from each other, while others remain connected. In this example, the computers comprising Group 1 can communicate with those of Group 3, and those of Group 2 can communicate with those of Group 3. But the Group 1 computers cannot communicate with the Group 2 computers. This partial partition leads to Group 1 seeing Group 2 as down and Group 2 seeing Group 1 as down, while Group 3 sees both as up. This often leads to both catastrophic and lasting impacts.
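The scenario in the figure can be modelled in a few lines of Python. This is only an illustrative sketch: the node names, the `WORKING_LINKS` set and the helper functions are hypothetical and stand in for one server per group. It shows how each side of the partition reaches a different conclusion about which peers are alive.

```python
from itertools import combinations

# Hypothetical cluster: one representative node per group in the figure.
NODES = ["group1", "group2", "group3"]

# Undirected links that still work during the partial partition.
# The group1 <-> group2 link is the one that has failed.
WORKING_LINKS = {
    frozenset(("group1", "group3")),
    frozenset(("group2", "group3")),
}


def can_reach(a, b):
    """Return True if a and b can exchange messages directly."""
    return frozenset((a, b)) in WORKING_LINKS


def view_of(node):
    """What a node concludes about its peers from direct contact alone."""
    return {
        peer: "up" if can_reach(node, peer) else "down"
        for peer in NODES
        if peer != node
    }


def is_partial_partition():
    """True if some pair is cut off while a third node still reaches both."""
    for a, b in combinations(NODES, 2):
        if can_reach(a, b):
            continue
        others = [c for c in NODES if c not in (a, b)]
        if any(can_reach(a, c) and can_reach(b, c) for c in others):
            return True
    return False


for node in NODES:
    print(node, "sees", view_of(node))
print("partial partition:", is_partial_partition())
```

In this model Group 1 and Group 2 each mark the other as down, while Group 3 sees both as up, so the nodes hold conflicting views of the same cluster.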

Even for researchers who delve into the intricacies of computer systems and networking, the results were unexpected. The team found that 75 percent of the failures they studied had a catastrophic impact that manifested as data loss, system unavailability, data corruption, stale and dirty reads, or data unavailability. They also discovered that 84 percent of the failures were silent: they did not send users error or warning messages. The study further revealed that more than two-thirds (69 percent) of the failures required three or fewer events to manifest, and that 24 percent of the failures were permanent, meaning the failure persisted even after the partial partition was fixed.

“These failures can result in shutting down a banking system for hours, losing your data or photos, search engines being buggy, some messages or emails getting lost or the loss of data from your database,” said Professor Al-Kiswany.

“Partial partitioning is a catastrophic failure that is easy to manifest and is deterministic, which means if a sequence of events happens, the failure is going to occur,” Professor Al-Kiswany continued. “We found that the partition in only one node was responsible for the manifestation of all failures, which is scary because even misconfiguring one firewall in a single node leads to a catastrophic failure.”

To fix the problem, the team of researchers developed a novel software-based approach that prevents these system failures. They dubbed it Nifty, an abbreviation for network partitioning fault-tolerance layer. Nifty is both simple and transparent.

To help ensure wide adoption by data centres, the team designed Nifty with three goals: to be system agnostic, so that it can be deployed in any distributed system; to require no changes to existing computer systems; and to impose negligible overhead.

“Our findings motivated us to build Nifty, a transparent communication layer that masks partial network partitions,” said Mohammed Alfatafta, the recent master’s graduate who led the project. “Nifty builds an overlay between nodes to detour signals around partial partitions. Our prototype evaluation with six popular systems shows that Nifty overcomes the shortcomings of current fault tolerance approaches and effectively masks partial partitions while imposing negligible overhead.”
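The idea of detouring traffic around a partial partition can be illustrated with a simple path search. The sketch below is not Nifty's implementation, which the article does not describe in detail. It assumes a hypothetical list of working links and uses a breadth-first search to find the shortest route between two nodes that can no longer talk directly.

```python
from collections import deque


def find_route(links, src, dst):
    """Shortest path of working links from src to dst, or None if cut off.

    `links` is an iterable of (node, node) pairs that can still communicate.
    This is a plain breadth-first search, used here only for illustration.
    """
    neighbours = {}
    for a, b in links:
        neighbours.setdefault(a, set()).add(b)
        neighbours.setdefault(b, set()).add(a)

    previous = {src: None}  # records how each node was first reached
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            # Walk back from dst to src to rebuild the route.
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for peer in sorted(neighbours.get(node, ())):
            if peer not in previous:
                previous[peer] = node
                queue.append(peer)
    return None


# Hypothetical links matching the figure: group1 <-> group2 has failed.
links = [("group1", "group3"), ("group2", "group3")]
print(find_route(links, "group1", "group2"))
```

Here the route from Group 1 to Group 2 passes through Group 3, which is the kind of detour an overlay could use to keep the two groups connected.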

While Nifty keeps the cluster connected, it may increase the load on bridge nodes, leading to lower system performance. “System designers who use Nifty can optimize the data or process placement or use a flow-control mechanism to reduce the load of bridge nodes,” Professor Al-Kiswany said. “To facilitate system-specific optimization, we’ve designed Nifty with an API to identify bridge nodes.”
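To make the notion of a bridge node concrete, the following sketch counts how many disconnected pairs each node could relay traffic for. It is an assumption-laden illustration, not Nifty's actual API: the function name `bridge_load` and its inputs are hypothetical, and it only considers detours through a single intermediate node.

```python
from collections import Counter
from itertools import combinations


def bridge_load(nodes, links):
    """Map each bridge node to the number of disconnected pairs it can relay.

    A node counts as a bridge for a pair (a, b) when a and b cannot talk
    directly but both can still talk to that node. Hypothetical helper,
    limited to one-hop detours.
    """
    working = {frozenset(link) for link in links}
    load = Counter()
    for a, b in combinations(nodes, 2):
        if frozenset((a, b)) in working:
            continue  # direct link is fine, no bridge needed
        for c in nodes:
            if c in (a, b):
                continue
            if frozenset((a, c)) in working and frozenset((b, c)) in working:
                load[c] += 1
    return dict(load)


# Hypothetical cluster from the figure: only group3 can bridge the gap.
nodes = ["group1", "group2", "group3"]
links = [("group1", "group3"), ("group2", "group3")]
print(bridge_load(nodes, links))
```

A system designer could use a count like this to decide where to move data or processes so that a heavily loaded bridge node carries less relayed traffic.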

To learn more about the research on which this feature is based, please see Mohammed Alfatafta’s virtual presentation at OSDI '20, the 14th USENIX Symposium on Operating Systems Design and Implementation.

Toward a Generic Fault Tolerance Technique for Partial Network Partitioning

Please also see Mohammed Alfatafta, Basil Alkhatib, Ahmed Alquraan, Samer Al-Kiswany, “Toward a Generic Fault Tolerance Technique for Partial Network Partitioning,” 14th USENIX Symposium on Operating Systems Design and Implementation, 2020.

Nifty source code

Nifty’s source code is freely available at https://github.com/UWASL/NIFTY.

USENIX Artifact Evaluation Committee

At submission time, the authors of a study can choose the criteria by which their contribution, or “artifact” as it is called, will be evaluated. The criteria correspond to three separate badges that can be awarded to a paper. This study received all three USENIX Artifact Evaluation Committee badges, essential criteria that establish the availability, functionality and real-world impact of the research.